Intelligence on Earth

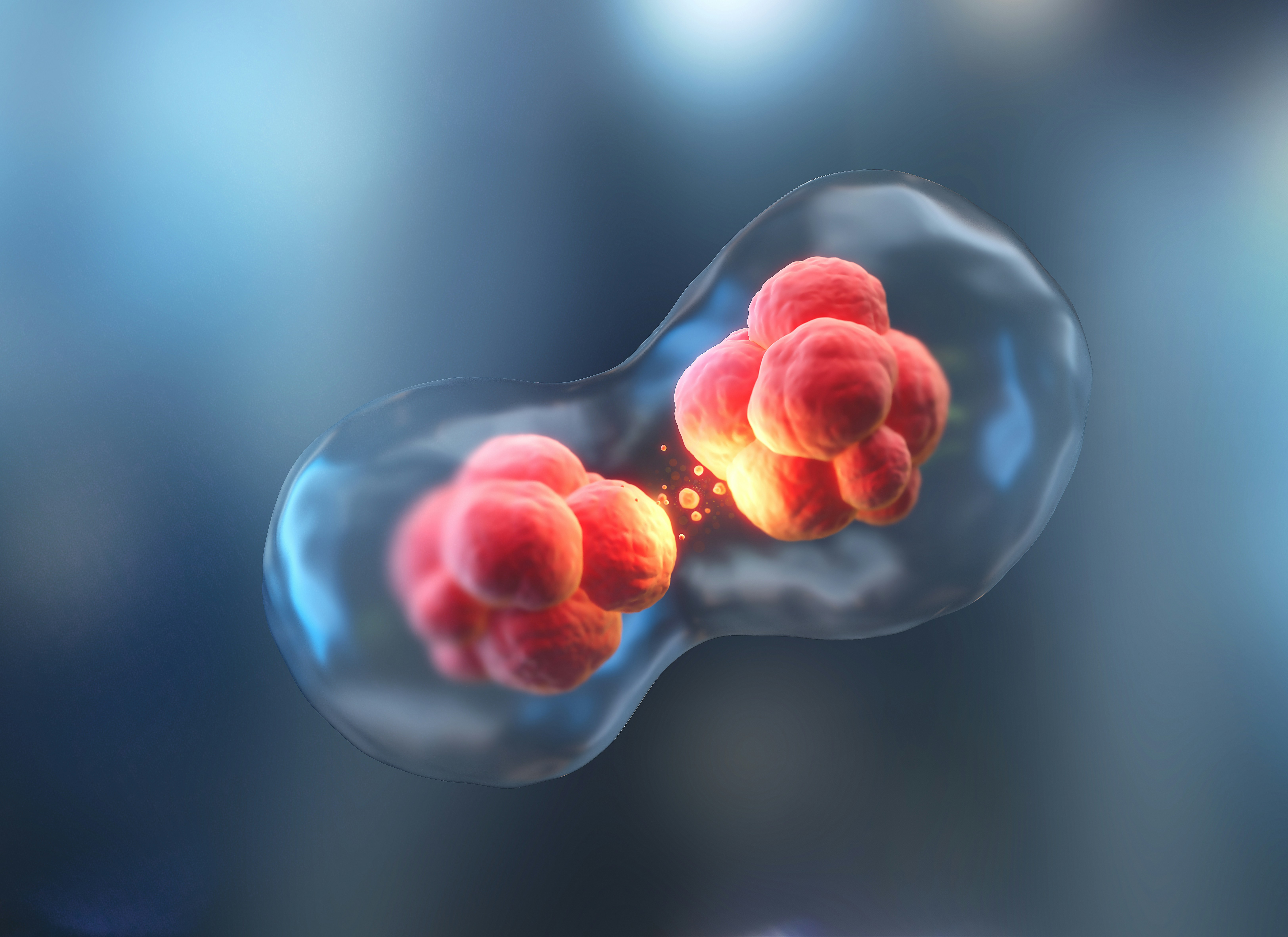

Even a unicellular species possesses intelligence.

Intelligence: Memory that Modifies Behavior

Intelligence is memory that modifies behavior. This simple definition helps us better understand the varied examples of intelligence we observe on Earth – including ourselves.

This lens is useful because of what it excludes: in this view, intelligence doesn't require consciousness, intention, or understanding; it doesn't need a brain, or even cells. It simply requires information that persists and influences action.

This works across substrates. We can trace a clear progression of intelligence on Earth – from genetic memory operating across generations, to neural learning within lifetimes, to behavioral and cultural transmission beyond individual experience, to written records that outlast their authors, to electronic systems that process information at scales beyond biological capacity.

Seen through this framework, even the single-celled amoeba appears intelligent. It “knows” how to respond to stimuli like food or danger, ingest food through phagocytosis, and form protective cysts during harsh conditions. Its memory is its genome. It learns by testing out new behaviors through genetic mutation: behaviors that confer some benefit are remembered, while poor behaviors are “forgotten” when the carriers of those genes die before reproducing. The amoeba’s intelligence thus operates primarily at the species level, although there is also evidence of conditioned behaviors in amoebae.

The Spectrum of Intelligence on Earth

Culture is a special case of this – norms and practices are encoded in experiential memory shared between individuals.

Memory and Computational Economics

As we trace intelligence through its evolutionary stages, we need to understand why memory exists at all.

The answer is computational efficiency. Calculation is expensive, biologically or otherwise, and computing everything from first principles in real time is impossibly expensive. An organism that must determine from scratch whether each stimulus represents food or poison will starve while deliberating. A system that re-derives every solution when confronted with a problem will be outcompeted by one that recalls past solutions instantly.
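In software terms, recalling instead of re-deriving is memoization. The following is a minimal sketch of that idea, not a model of any real organism: `classify_from_scratch` and its toy "toxin" rule are hypothetical stand-ins for an expensive evaluation, and `call_count` exists only to show how often the expensive path actually runs.

```python
from functools import lru_cache

call_count = 0  # how many times the expensive evaluation actually ran


def classify_from_scratch(stimulus: str) -> str:
    """Hypothetical stand-in for an expensive first-principles evaluation."""
    global call_count
    call_count += 1
    # Toy rule, purely illustrative: anything containing "toxin" is poison.
    return "poison" if "toxin" in stimulus else "food"


@lru_cache(maxsize=None)
def classify_with_memory(stimulus: str) -> str:
    """Same answers, but a repeated stimulus is recalled, not recomputed."""
    return classify_from_scratch(stimulus)


stimuli = ["sugar", "toxin-a", "sugar", "sugar", "toxin-a"]
results = [classify_with_memory(s) for s in stimuli]
```

Five stimuli are classified, but the expensive function runs only once per distinct stimulus; every repeat is answered from memory.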

Knowledge is experience compressed into something with predictive power – it’s defined by its utility as a model to anticipate the world around us. Consider the bacterium performing chemotaxis: it doesn't recalculate optimal swimming trajectories from physics equations. Millions of years of selective pressure have encoded the necessary earthly physics directly into its molecular machinery. The computation and learning happened across evolutionary time; the memory allows instant deployment.

The trade-off is universal: memory saves computation at the cost of brittleness.

That same bacterium dropped on a planet with different gravity wouldn’t last long – its memory wouldn’t properly reflect the physical properties of its new environment. Memory that is no longer an adequate predictor – due to a change in circumstances in the world since the memory was encoded, for example – is not useful as knowledge anymore. It might save on expensive computation, but doesn’t provide a good model of the world. And that leads to an ineffective (read: dead) agent.
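The brittleness can be sketched as a cache that silently encodes the environment it was learned in. This is an illustrative toy under stated assumptions: the class `RememberedWorld`, the free-fall formula as the "knowledge", and the second gravity value are all chosen for the example and do not come from the text.

```python
import math


def fall_time(height_m: float, gravity: float) -> float:
    """Time to fall from rest through height_m, ignoring air resistance."""
    return math.sqrt(2 * height_m / gravity)


class RememberedWorld:
    """Remembers answers keyed by height only (assumption for illustration).

    The key omits gravity, so the memory is only valid in the environment
    where it was first learned.
    """

    def __init__(self) -> None:
        self._memory = {}

    def fall_time(self, height_m: float, gravity: float) -> float:
        if height_m not in self._memory:
            self._memory[height_m] = fall_time(height_m, gravity)
        return self._memory[height_m]  # reused even if gravity has changed


agent = RememberedWorld()
learned_on_earth = agent.fall_time(4.905, gravity=9.81)

# Same agent, different planet (gravity value chosen for illustration).
recalled_elsewhere = agent.fall_time(4.905, gravity=3.71)
actual_elsewhere = fall_time(4.905, gravity=3.71)
```

The recalled answer is fast and was once correct, but in the new environment it differs substantially from the recomputed one. Nothing in the memory itself signals that it has gone stale.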

Thus for an intelligent system – of any type – the two most critical questions are:

- How do I determine what knowledge to encode as memory?

- When do I need to re-evaluate, versus relying on existing memory?

Epistemological Intelligence and Metacognition

Given the above, the most important determinant of an intelligence’s success – the solution to the brittleness of memory – is its capacity for epistemological intelligence: taking ownership of its fallibility and reasoning about the reliability of its knowledge.

A system that is aware of why it believes what it does can recognize when those reasons no longer hold. A system that quantifies how confident it is can flag situations where its existing knowledge might be an unreliable guide. A system that understands what would increase its confidence can take actions to look more closely and update its information.

Metacognition is strategic skepticism. Without this, memory can become more sophisticated, but not more reliable.

Cultural practices that once conferred evolutionary advantages on a population can become maladaptive when conditions change – yet persist for centuries if we lack mechanisms for cultural reassessment. Agricultural techniques optimized for historical climate patterns fail catastrophically when the planet warms. Social norms around resource consumption that worked for small populations become existential environmental threats at scale. Biological adaptations that were beneficial in an environment of caloric scarcity cause disease for humans in an environment of abundance. Coping mechanisms learned in childhood often stop providing benefit in adulthood. And LLMs that architecturally assume their training data represents what is true will speak with a tone of confidence that has no bearing on their accuracy.

The most important determinant of an intelligence's success is not how much it can remember or how quickly it modifies behavior, but whether it can self-evaluate. This is the holy grail for any intelligent system. A metacognitive intelligence must:

- know why it believes what it does,

- be aware of how confident it is or isn't, and

- understand what actions it can take to increase its confidence.
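The three requirements above can be sketched as a data structure plus a decision rule. This is one hypothetical way to represent them, not a description of how any existing AI system works: the `Belief` fields, the `decide` function, and the 0.8 threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Belief:
    claim: str
    reasons: List[str]   # why the system believes the claim
    confidence: float    # how confident it is, from 0.0 to 1.0
    checks: List[str] = field(default_factory=list)  # actions that could raise confidence


def decide(belief: Belief, threshold: float = 0.8) -> str:
    """Choose between relying on memory and re-evaluating (illustrative rule)."""
    if not belief.reasons:
        # Confidence without justification cannot be audited, so distrust it.
        return "re-evaluate: no recorded justification"
    if belief.confidence >= threshold:
        return "rely on memory"
    if belief.checks:
        return f"investigate: {belief.checks[0]}"
    return "re-evaluate: low confidence and no known check"


familiar = Belief("this berry is food", ["eaten safely many times"], 0.95)
uncertain = Belief("this berry is food", ["resembles a known berry"], 0.4,
                   ["taste a small amount"])
unfounded = Belief("this berry is food", [], 0.99)

decisions = [decide(b) for b in (familiar, uncertain, unfounded)]
```

Note that the third belief is rejected despite its high stated confidence: in this sketch, confidence with no recorded reason is treated as unreliable, which mirrors the point about systems whose tone of confidence has no bearing on their accuracy.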

Advanced intelligence examines itself – and thus learns how to learn. This recursive capability distinguishes knowledge from information, science from superstition, and aligned AI from fundamentally untrustworthy systems.

AI capable of this level of introspection and epistemological ownership understands that it can be wrong. The most trustworthy AI is one that grapples with hard problems the way you and I might. Building such systems moves us toward the next position on the spectrum of intelligence on our planet.